For as long as there have been computers, I’m sure people have been trying to create automatic LEGO sorting machines. In itself this is a huge challenge, but there have been quite a few fantastic efforts. There are manual sorting devices (you can print your own here). People like Daniel West built an amazing machine 4 years ago. There’s even a Discord server for the Great Brick Lab – a collection of people working on an open source version.

Since the launch of ChatGPT in November 2022, the world has been abuzz with the power of Artificial Intelligence (AI). AI and Machine Learning (a subset of AI) have been around for a long time. However, ChatGPT brought them within reach of the common user. AI advances directly relate to the likelihood of success in building an automated LEGO sorting machine for the masses (us!).

Before you head on, don’t forget to check out the 3D printed LEGO Minifig Hexagons and Sorting Shaker that you can download for free and 3D print at home.

Pre Warning: Technical concepts ahead

The next few paragraphs dig a little into the technical challenges. I’ve tried to take quite technical ideas and reduce them as much as I can. Hopefully this makes the concepts easier to understand. In doing so, technical readers may feel I’ve butchered the concepts. It’s quite possible I have, so feel free to let me know where, or if there’s a better way to explain them.

If you’re not interested in any of the technical info and just want to check out my interview with Piotr Rybak – the creator of Brickognize, an AI driven LEGO brick recognizer – you can jump straight there! If you’d prefer to keep reading, there’s some great info ahead and I promise I’ll get there in the end.

LEGO sorting machine Challenges

There is a reason that you can’t buy a fully automatic LEGO sorting machine – it’s really hard to build! The challenges are vast and the problem set is broad, particularly when you are trying to do so at a cost effective price point.

Amongst the many challenges in this mission, two are key in building a LEGO sorting machine: the mechanical aspects and the brick recognition. With over 80,000 different types of LEGO bricks, a machine that works for a 1 x 1 stud is unlikely to easily support a 16 x 2 brick. Putting aside the mechanical challenges, there’s a more fundamental issue: how do you even work out what each brick is?

The answer is to get your kids to look at each one, then manually sort them for you! Ok, that rarely works for more than about 2 minutes. So the real long term solution is to utilise Computer Vision, a field that leans heavily on machine learning. There is an absolute mountain of information available on this field, but three areas are specifically helpful:

Deep learning (machine learning using artificial neural networks to learn complex patterns from data)

Convolutional neural networks (CNNs) (deep learning architecture designed for processing image data) and

Object detection algorithms (working out where something is in a frame).

You could spend years learning about each of these if you want to get deep into the technical elements.

Daniel has written two excellent articles about the challenges he faced in his quest: “How I created over 100,000 labeled LEGO training images” and “A high-speed computer vision pipeline for the universal LEGO sorting machine”. Both are very enjoyable reads and highly recommended.

How Computers see

There have been many significant advances in computer vision in the last 12 months alone, but fundamentally computers do not see the same way we do. If you gave the following set of images to a 5 year old child, they could quickly tell you these are all the same brick, even though the rotation, lighting and angles are all different.

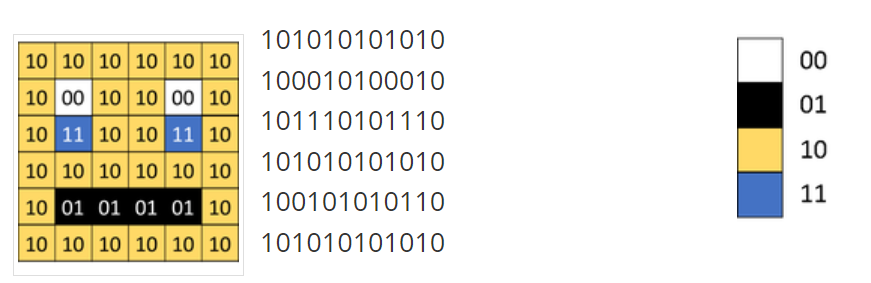

However, to an untrained computer, these are all completely different things. The computer stores each image as binary (ultimately a lot of 1s and 0s). A quick primer can be found at Teach with IT: Binary Representation of Images.
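To make that concrete, here is a minimal Python sketch. The tiny 4 x 4 "photo" and the helper names are made up for illustration; a real photo has millions of pixels, each with several colour values. It rotates the same brick by 90 degrees and then naively compares the two images pixel by pixel.

```python
# A tiny 4 x 4 greyscale "photo": 1 = brick pixel, 0 = background.
# (Made-up data for illustration only.)
brick = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]


def rotate_90(image):
    """Rotate a grid of pixels 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def matching_pixels(a, b):
    """Count the pixels that have the same value in both images."""
    return sum(
        pa == pb
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


rotated = rotate_90(brick)
total = len(brick) * len(brick[0])
print(f"{matching_pixels(brick, rotated)} of {total} pixels match after rotating")
```

It's the same brick, yet the raw numbers no longer line up. A naive comparison like this has no idea the two images show the same object.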

This is where training a machine learning model comes in, along with the need to build a large, high-quality data set for the model to learn from. The challenge is compounded with LEGO pieces, as many of them look very similar, particularly if viewed from a certain angle.

Is the brick below a 2 x 2 plate or a 2×2 brick?

What about this brick? Is it a 1 x 2 brick, or a 2 x 2 brick, or even a 16 x 2 brick?

You’ll quickly see that one of Daniel’s challenges was how to create a data set to train the algorithm to correctly detect each LEGO brick. He’s put together another great video here on his synthetic data creation efforts. This is not a challenge unique to Daniel; it faces literally everyone trying to build an automated LEGO sorting machine.

For those playing at home, this roughly translates to:

* We need to scan each brick to teach the AI model what each brick is. Each brick would need to be scanned (ideally) MANY, MANY times. Let’s just say 2000 images. Generally the more the better, although that’s not always the case. We’ll ignore introducing bias to models for now as it’s a rabbit hole that will take us far off course.

* If we assume 80,000 LEGO bricks, and we want 2,000 images each, that’s 160,000,000 images.
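For anyone who wants to play with the numbers, here is the same back-of-the-envelope sum in Python. The 10 seconds per photo is purely my assumption for one person placing and photographing a brick; change it to whatever you think is realistic.

```python
BRICK_TYPES = 80_000      # figure used above
IMAGES_PER_BRICK = 2_000  # figure used above
SECONDS_PER_PHOTO = 10    # assumption: time to place and photograph one brick

total_images = BRICK_TYPES * IMAGES_PER_BRICK
total_seconds = total_images * SECONDS_PER_PHOTO
years_non_stop = total_seconds / (60 * 60 * 24 * 365)

print(f"{total_images:,} images")
print(f"about {years_non_stop:.0f} years of non-stop photography")
```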

As you can imagine, this would take a monumental effort. So rather than do this manually, creating a synthetic (not real) data set of images could rapidly speed up this effort. Think 10,000x speed improvement. Essentially, you’re combining photos of real LEGO bricks with rendered versions of 3D models of LEGO bricks. With a rendering engine, the entire 360-degree view of a brick could be quickly simulated from many angles, under many lighting conditions etc.

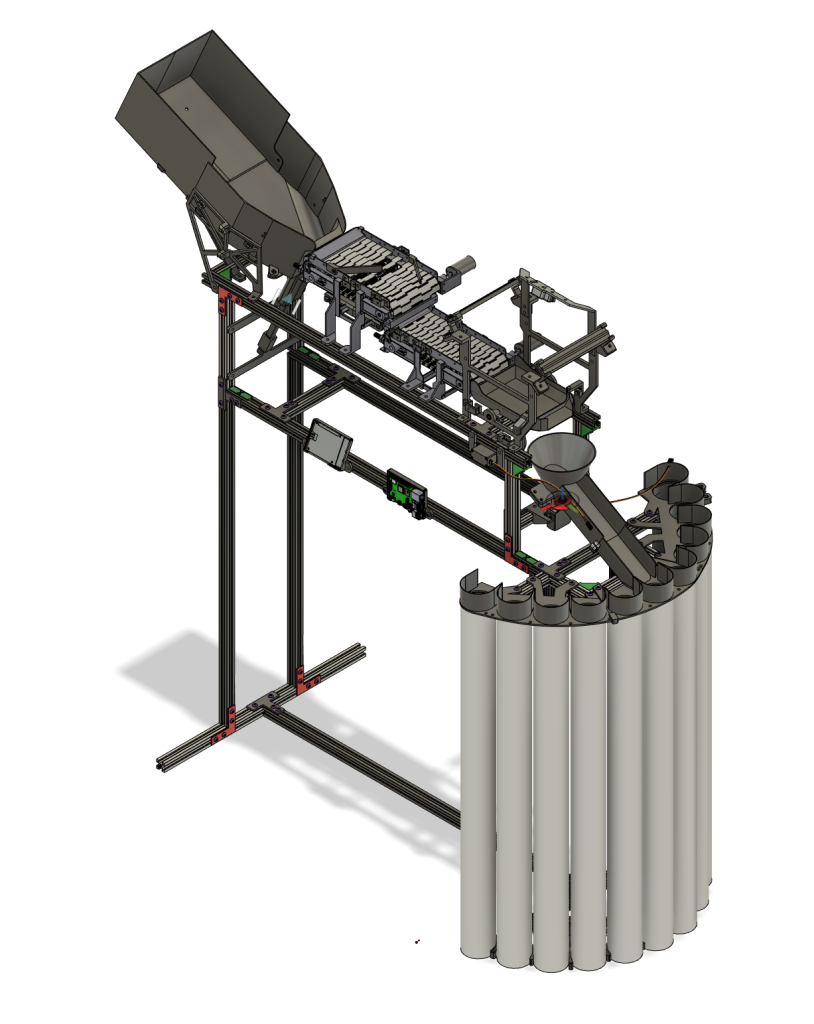
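As a rough sketch of the synthetic data idea, the snippet below only lists the combinations of viewing angle and lighting that a rendering engine could be asked to produce; it doesn't render anything. The angle steps, lighting names and the `render_jobs` helper are illustrative assumptions on my part, not Daniel's actual pipeline.

```python
from itertools import product


def render_jobs(part_id, yaw_step=30, pitch_step=30,
                lights=("soft", "harsh", "dim")):
    """List every (part, yaw, pitch, lighting) combination to render."""
    yaws = range(0, 360, yaw_step)         # spin the brick all the way around
    pitches = range(-90, 91, pitch_step)   # tilt from underneath to directly above
    return [
        {"part": part_id, "yaw": yaw, "pitch": pitch, "light": light}
        for yaw, pitch, light in product(yaws, pitches, lights)
    ]


jobs = render_jobs("3001")  # example part number
print(len(jobs), "renders queued for part", jobs[0]["part"])
```

Even with these coarse steps, a single part produces a few hundred different views, and none of them need a human to hold a camera.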

My LEGO sorting machine

For a while, I spent quite a lot of time attempting to build my own LEGO sorting machine. I was pretty happy with my progress, but ultimately realised that some key challenges were insurmountable with the time I had to invest in the project. I think I got around 70% of the way there, with a clear view of how to get to 100%, but that last 30% would still take a monumental effort.

My goal was to build a machine that would be available for less than $1000 AUD and that you could mostly print at home by yourself. Much of the cost came from components like the Raspberry Pi, motors, Arduino and more. One of the key challenges was implementing a system that recognised each brick using a single camera to keep costs down. When I started the project, I planned to build my own AI model, but creating the training set (plus my limited coding skills) made this part of the project like swimming through treacle. Not impossible, but certainly a challenge. So I shelved the project, put the printed contraption into the shed and accepted defeat (at least for now). Those that know me know how hard it is for me to accept defeat!

It would be remiss of me not to mention the amazing education I received through the team at PyImageSearch. I’ve purchased many of the series over the past few years and they provide an excellent combination of fundamentals, hands-on examples and real projects you can build.

Enter Brickognize!

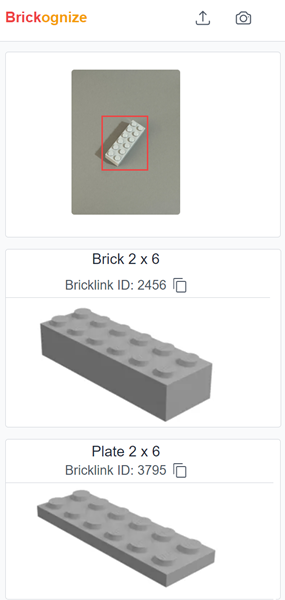

Recently I came across Brickognize, an online LEGO brick recognition tool. Built by Piotr Rybak, it lets you upload an image of a brick and tells you what it is. It’s utterly fantastic, currently available at no charge and also supports an API. API support means you could effectively “outsource” the hardest part of my challenge above and just focus on the mechanical challenges in the build.
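To show what “outsourcing” could look like on the sorting machine's side, here is a minimal Python sketch. It deliberately does not call the real Brickognize API: the `recognise` function is passed in, and the bin numbers, the score threshold and the fake recogniser are all hypothetical stand-ins for illustration.

```python
from typing import Callable, Tuple

# Hypothetical mapping from part number to a physical bin on the machine.
BIN_FOR_PART = {"3001": 1, "3003": 2}
REJECT_BIN = 0  # anything unrecognised or unmapped ends up here


def choose_bin(image_bytes: bytes,
               recognise: Callable[[bytes], Tuple[str, float]],
               min_score: float = 0.8) -> int:
    """Ask the recogniser what the brick is, then pick a bin for it."""
    part_id, score = recognise(image_bytes)
    if score < min_score:
        return REJECT_BIN  # not confident enough, leave it for a human
    return BIN_FOR_PART.get(part_id, REJECT_BIN)


def fake_recogniser(image_bytes: bytes) -> Tuple[str, float]:
    """Stand-in for a call to an external recognition service."""
    return ("3001", 0.93)


print("send brick to bin", choose_bin(b"raw camera frame", fake_recogniser))
```

The point of the design is that the machine only needs a part number and a confidence score back. Everything to do with training data and models lives behind that one function call.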

Not only is the functionality amazing but, in my testing so far, Piotr has made massive steps forward in matching the right brick to the right result. I was (and am) so impressed by his efforts that we got talking about the project and his future plans. Enjoy!

I’m a huge fan, but for those who might not know what Brickognize is, can you tell us a little about it?

Brickognize is an image search tool for LEGO that recognizes almost all current and historical bricks, sets and minifigures. You simply take a picture of an unknown brick and it will recognize what it is. And that’s no easy task, because there are over 80 thousand of them.

But that’s not all. Brickognize is available both as a website and as an API (Application Programming Interface). It means that any application can use Brickognize to recognize Lego bricks. This opens a lot of possibilities for other programmers to build awesome apps for Lego fans.

Why did you create Brickognize? Was there a spark of inspiration that kicked off the project?

I always wanted to build a LEGO sorting machine to organize my ever-growing pile of unsorted Lego bricks, but I had no idea how to write software to automatically classify Lego bricks. That changed when I started working in machine learning almost 10 years ago. I had some experience with classification, so I thought I should give it a try.

And I failed miserably. It was much harder than I thought. Two years ago, I took a sabbatical to relax and work on some side projects. Since it was just after I participated in the Polish edition of Lego Masters, I thought it would be great to return to this idea of recognizing LEGO bricks.

Have you always been a fan of LEGO? Do you have a favourite set, theme or series?

Definitely! I have been a LEGO fan for as long as I can remember. I started building with my older sister’s bricks, but my parents quickly noticed that LEGO was my favorite toy and started giving me LEGO sets for every birthday, Christmas, etc.

Since I was also a fantasy nerd, I always loved the Castle theme the most. As I got older, I also spent a lot of time building with Lego Technic. For a couple of years I was active in a Truck Trial community. We built remote-controlled LEGO trucks and raced against each other on special outdoor tracks. Today, I focus mostly on building MOCs.

Machine learning has come a long way in the last few years. One of the challenges with LEGO is the sheer volume of bricks available. Traditionally training involved using real training data. Can you tell us a little about the challenges you faced and how you tried to solve them?

The lack of training data was definitely the biggest challenge. There are almost no datasets with images of real Lego bricks, and the available ones are very limited. I approached the problem from two directions. First, I collected as much data as possible, including taking pictures of my own bricks and making renders of LDraw models. But that was still not enough. So instead of training an image classifier, I decided to build an image search engine.

There is a big difference between the two. To train a classifier, you need a lot of images of every single brick, so that it learns what each brick looks like. But to train a search engine, you only need to learn whether two images represent the same brick. This greatly reduces the amount of training data you need. Then you only need a single image of each brick to perform a search.
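For the technical readers, here is a small Python illustration of what “same brick or not” training examples might look like. Piotr doesn't describe his training code here, so the file names, part numbers and the `make_pairs` helper are all made up for the sketch.

```python
from itertools import combinations

# Hypothetical labelled photos: (file name, part number).
photos = [
    ("a1.jpg", "3001"), ("a2.jpg", "3001"),
    ("b1.jpg", "3003"), ("b2.jpg", "3003"),
]


def make_pairs(labelled):
    """Return (image_a, image_b, same_brick) for every pair of photos."""
    return [
        (img_a, img_b, label_a == label_b)
        for (img_a, label_a), (img_b, label_b) in combinations(labelled, 2)
    ]


pairs = make_pairs(photos)
positives = [p for p in pairs if p[2]]
print(len(pairs), "pairs,", len(positives), "of them show the same brick")
```

Notice that the model is never asked “which of 80,000 bricks is this?”. It is only asked whether two pictures show the same thing, and every photo contributes to many such pairs.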

Can you train the model on just a single image?

I need at least two images as the bare minimum. But of course more images per brick is better. I still spend a lot of time trying to find as many real images as possible. But many LEGO bricks are now retired and difficult to come by. The key is in the approach we touched on above. Rather than training the model on the specific images, we convert each brick to a vector and then train the model on the vector representation. Lots of images of the same brick would result in a “sharp” vector, but fewer images would result in a “fuzzy” vector.

Then the search engine takes the image submitted by users, converts it to a vector via an image encoder and finds the closest match. The model can generalise for those bricks with a low sample size (e.g. a single image). There are a few other techniques I use, such as a low number of epochs to avoid overfitting the data. Originally I only had 12k bricks, but I’m now up to around 60k!

(Adam Note: for the question above, I paraphrased a lengthy discussion on Discord with Piotr for brevity, but the concepts should be there)
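The “find the closest match” step can be sketched in a few lines of Python. This is only an illustration: the three-number vectors are invented (a real image encoder produces vectors with hundreds of values), and cosine similarity is my assumption for how “closest” is measured, since Piotr doesn't say which measure Brickognize uses.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: close to 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical index: one reference vector per known brick.
index = {
    "2 x 4 brick": [0.9, 0.1, 0.0],
    "2 x 2 plate": [0.1, 0.9, 0.2],
    "minifig head": [0.0, 0.2, 0.9],
}


def search(query_vector, index):
    """Return (name, similarity) for the closest reference vector."""
    return max(
        ((name, cosine_similarity(query_vector, vec)) for name, vec in index.items()),
        key=lambda item: item[1],
    )


query = [0.8, 0.2, 0.1]  # pretend this came from encoding the user's photo
name, score = search(query, index)
print(f"closest match: {name} ({score:.2f})")
```

Adding a new brick to a search engine like this only means adding one more vector to the index, which fits with how a single reference image can be enough to perform a search.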

What surprised you the most about your Brickognize journey so far?

Many things! First, how difficult it was to get it to work reasonably well. I originally planned to build Brickognize in three months, but it took over a year to release the first version. And another year to improve it. But what kept me working was the amazing feedback from users. I never imagined that it would become so popular and that so many people would find it useful.

Another thing that surprised me was how difficult it is to recognize standard bricks, for example, to distinguish a 2 x 4 brick from a 2 x 3 brick. To the machine learning models, they are really similar. I initially spent a lot of time trying to solve this problem, but I realized that it was unnecessary. Users just don’t try to recognize them because they already know what they are. They care about decorated or rare bricks, which are much easier to recognize.

Do you get feedback from Brickognize users and fans? How should people get in touch with you with ideas they have for the service?

Yes, and this is one of the best parts of building Brickognize. It’s always a pleasure to talk to the users. Even if the feedback is negative, it means that they use Brickognize and care about it!

If you have any feedback or ideas on how to improve Brickognize, send me an email at: piotr.rybak@brickognize.com

Do you have any future plans for Brickognize?

In the short term, I plan to improve the experience for the Lego sellers. This will include recognizing multiple bricks on a single image, saving the recognized bricks to your account, and being able to export them to Bricklink. There is also a lot of maintenance work to be done to keep the database of bricks up-to-date etc.

I know a lot of users want me to make mobile apps as well. This will happen eventually, but I have to implement all the planned features on the web version first. Making changes on three platforms (web, iOS, Android) is just a lot more work.

For the technical readers, is there any more info you’re happy to share?

Sure, I’m pretty open about how Brickognize works. I currently use the ConvNeXt-T model for image recognition, but there is nothing really special about this particular model. Other modern ML models for vision achieve comparable results. It’s also quite small by today’s standards (28 million parameters), but that means it’s fast.

The training process is implemented in PyTorch using the Lightning framework and takes a few hours on an A100 GPU. As for hosting, I use a small CPU-only server on OVH. It’s much cheaper than hosting in the cloud and I try to be as cost-efficient as possible. However, as the traffic on Brickognize grows, I plan to migrate to a more powerful server soon.

For those who are more interested in the ML details of Brickognize, I recommend my talk from last year’s EuroPython conference.

Currently, Brickognize is free to use. It’s amazing. Is there any way people can send you donations as thanks for your service?

I plan to keep Brickognize free for casual use, but I’m also working on introducing paid accounts to help cover the costs. I haven’t figured out the pricing policy yet, but I’d like to allow both one-time donations and a more recurring payment. I will definitely ask for feedback on this when I am closer to the release date, but if any of you have any ideas now, please let me know!

AI is a huge megatrend at the moment. Have advances such as LLMs helped you develop Brickognize or speed up your workflow?

Since Brickognize is mostly about image classification, the LLMs are not much help. I used them a few times to write some front-end code, but that’s it. There are some multimodal models (i.e. working with text and images), but they were not really useful for any real application related to Lego bricks. However, I plan to work more with the Stable Diffusion models to generate new training data.

Thanks so much Piotr. Keep up the amazing work!

Hi.

This is really exciting. I’ve been wanting something like this for years – ever since I saw Daniel West’s YouTube video.

It sounds like you’ve had some success with your machine. I’ve been thinking lately about making one myself but I don’t have a 3d printer, and lack the skills in AI and robotics etc.

You say in your article that you wanted it to cost under AUD 1000 to make. How much would a complete working machine cost someone like me to buy?

Gday Marty,

It’s a great question. Currently to my knowledge no-one has really been able to build a machine that could be bought by a consumer. The items like Daniel’s amazing machine take a huge amount of ongoing tweaking and are really suited for a hobbyist who loves to tinker.

I think if mass produced, a working machine might be around the $1500-$2000 mark, but again, so many variables.

I’m eager for when such a machine arrives!